Neural Face Rigging for Animating and Retargeting Facial Meshes in the Wild

Abstract

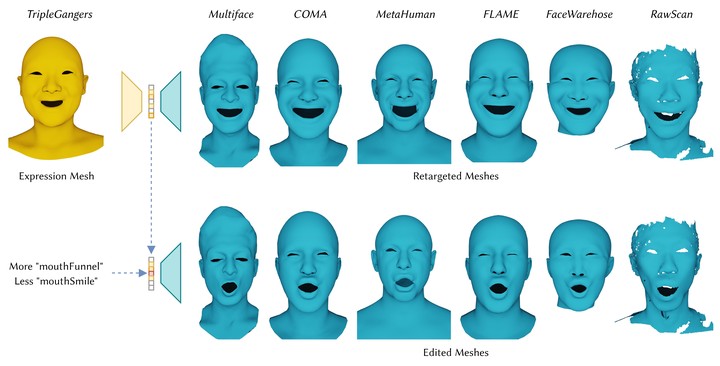

We propose an end-to-end deep-learning approach for automatic rigging and retargeting of 3D models of human faces in the wild. Our approach, called Neural Face Rigging (NFR), has three key properties:

(i) NFR’s expression space maintains human-interpretable editing parameters for artistic controls;

(ii) NFR is readily applicable to arbitrary facial meshes with different connectivity and expressions;

(iii) NFR can encode and produce fine-grained details of complex expressions performed by arbitrary subjects.

To the best of our knowledge, NFR is the first approach to provide realistic and controllable deformations of in-the-wild facial meshes without the manual creation of blendshapes or correspondence. We design a deformation autoencoder and train it with a multi-dataset training scheme that benefits from the unique advantages of two data sources: a linear 3DMM with interpretable control parameters as in FACS, and 4D captures of real faces with fine-grained details. Through various experiments, we show NFR’s ability to automatically produce realistic and accurate facial deformations across a wide range of existing datasets as well as noisy facial scans in the wild, while providing artist-controlled, editable parameters.
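For illustration only: a linear 3DMM of the kind mentioned above is commonly written as a neutral shape plus a weighted sum of per-expression vertex offsets, with the weights serving as the interpretable controls. The minimal NumPy sketch below shows that linear part alone on a toy mesh. The function name `blend_linear`, the array shapes, and the two toy offsets are assumptions made for this example; it is not NFR's deformation autoencoder, nor the basis of any particular dataset.

```python
import numpy as np


def blend_linear(neutral, offsets, weights):
    """Return neutral + sum_k weights[k] * offsets[k].

    neutral: (V, 3) rest-pose vertex positions
    offsets: (K, V, 3) per-expression vertex displacements (hypothetical basis)
    weights: (K,) one interpretable activation per expression
    """
    neutral = np.asarray(neutral, dtype=float)
    offsets = np.asarray(offsets, dtype=float)
    weights = np.asarray(weights, dtype=float)
    # Contract the K axis: (K,) with (K, V, 3) gives (V, 3).
    return neutral + np.tensordot(weights, offsets, axes=1)


# Toy data (assumed for illustration): 4 vertices, 2 made-up expression offsets.
neutral = np.zeros((4, 3))
offsets = np.zeros((2, 4, 3))
offsets[0, 0, 1] = 1.0   # expression 0 lifts vertex 0 along +y
offsets[1, 3, 0] = -0.5  # expression 1 moves vertex 3 along -x

half_raise = blend_linear(neutral, offsets, [0.5, 0.0])  # expression 0 at half strength
both = blend_linear(neutral, offsets, [1.0, 1.0])        # both expressions fully on
```

Because the model is linear, each weight edits one direction of deformation independently, which is what makes such parameters easy to expose as artist controls. The same linearity limits how much fine-grained, subject-specific detail it can express, which is the gap the 4D captures are described as filling.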