Normalized Human Pose Features for Human Action Video Alignment

Abstract

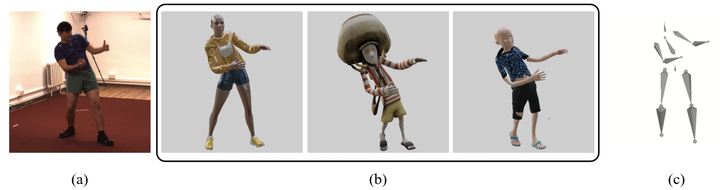

We present a novel approach for extracting human pose features from human action videos. The goal is for the pose features to capture only the poses of the action while remaining invariant to other factors, including video backgrounds, viewpoints, and the subjects’ anthropometric characteristics. Such pose features make it easier to measure pose similarity and can be used for downstream tasks such as human action video alignment and pose retrieval. The key to our approach is to first normalize the poses in the video frames by mapping them onto a pre-defined 3D skeleton, which both removes subject-specific physical characteristics, such as bone lengths and ratios, and unifies the global orientations of the poses. The normalized poses are then mapped to a pose embedding space of high-level features, learned via unsupervised metric learning. We evaluate the effectiveness of our normalized features both qualitatively, through visualizations, and quantitatively, through a video alignment task on the Human3.6M dataset and an action recognition task on the Penn Action dataset.
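To make the normalization idea concrete, the following is a minimal sketch of what mapping a pose onto a pre-defined skeleton could look like. It is not the method of this paper, whose details are not given in the abstract. The five-joint toy skeleton, the template bone lengths, the choice of the y axis as vertical, and the function names `retarget`, `unify_yaw`, and `normalize_pose` are all assumptions made for illustration. The sketch copies each bone direction onto a template bone length, then rotates the pose about the vertical axis so that the hip line points along +x.

```python
import math

# Hypothetical toy skeleton: joint -> parent (root has parent None).
# Joints are listed so that every parent appears before its children.
PARENTS = {
    "pelvis": None,
    "l_hip": "pelvis",
    "r_hip": "pelvis",
    "spine": "pelvis",
    "head": "spine",
}

# Assumed template bone lengths, keyed by the child joint of each bone.
TEMPLATE_LENGTHS = {"l_hip": 1.0, "r_hip": 1.0, "spine": 2.0, "head": 1.0}


def retarget(pose):
    """Keep each bone's direction but replace its length with the template's.

    The root joint is placed at the origin, which also removes translation.
    """
    out = {}
    for joint, parent in PARENTS.items():
        if parent is None:
            out[joint] = (0.0, 0.0, 0.0)
            continue
        bone = tuple(c - p for c, p in zip(pose[joint], pose[parent]))
        norm = math.sqrt(sum(x * x for x in bone))
        if norm == 0.0:
            raise ValueError(f"zero-length bone at joint {joint!r}")
        scale = TEMPLATE_LENGTHS[joint] / norm
        out[joint] = tuple(o + x * scale for o, x in zip(out[parent], bone))
    return out


def unify_yaw(pose):
    """Rotate about the vertical (y) axis so r_hip -> l_hip points along +x.

    Assumes the hip line is not parallel to the vertical axis.
    """
    hx = pose["l_hip"][0] - pose["r_hip"][0]
    hz = pose["l_hip"][2] - pose["r_hip"][2]
    theta = math.atan2(hz, hx)  # angle of the hip line in the xz-plane
    c, s = math.cos(theta), math.sin(theta)
    # Rotating by -theta in the xz-plane sends the hip line to the +x axis.
    return {j: (c * x + s * z, y, -s * x + c * z) for j, (x, y, z) in pose.items()}


def normalize_pose(pose):
    """Bone-length retargeting followed by global yaw alignment."""
    return unify_yaw(retarget(pose))
```

Under these assumptions, two observations of the same pose that differ only in subject scale, position, and rotation about the vertical axis normalize to the same joint coordinates, so a simple distance between normalized poses reflects pose differences alone. The learned embedding stage described above is not covered by this sketch.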